- Xen is a virtual machine monitor for x86 that supports execution of multiple guest operating systems with unprecedented levels of performance and resource isolation. This package contains the libraries and header files needed to create tools to control virtual machines.


- 1 Virtual Network Interfaces

- 3 Bridging

- 4 Open vSwitch

- 5 Routing

- 6 Network Address Translation

- 9 ASCII Art Examples of Xen Networking Topologies

Xenvbd is the driver that interfaces between the Windows scsiport miniport driver and the Linux blockback driver. Almost all of the Windows scsiport code runs at a very high IRQL, and there is a long list of things that cannot be done at a high IRQL.

Getting The Xen Project Hypervisor

The Xen Project hypervisor is available as source distribution from these download pages. You can find instructions on how to build the Xen Project source release at this page.

Sources for Xen Project Binaries

The Xen Project Hypervisor is the basis for many commercial products.

Paravirtualised Network Devices

A Xen guest typically has access to one or more paravirtualised (PV) network interfaces. These PV interfaces enable fast and efficient network communications for domains without the overhead of emulating a real network device. Drivers for PV network devices are available by default in most PV aware guest OS kernels. In addition PV network drivers are available for various guest operating systems when running as a fully virtualised (HVM) guest, e.g. via PV on HVM drivers for Linux or the GPL PV drivers for Windows.

A paravirtualised network device consists of a pair of network devices. The first of these (the frontend) will reside in the guest domain while the second (the backend) will reside in the backend domain (typically Dom0). A similar pair of devices is created for each virtual network interface.

The frontend devices appear much like any other physical Ethernet NIC in the guest domain. Typically under Linux each one is bound to the xen-netfront driver and creates a device ethN. Under NetBSD and FreeBSD the frontend devices are named xennetN and xnN respectively.

The backend device is typically named such that it contains both the guest domain ID and the index of the device. Under Linux such devices are by default named vifDOMID.DEVID while under NetBSD xvifDOMID.DEVID is used.

In both cases the device naming is subject to the usual guest or backend domain facilities for renaming network devices. For the remainder of this document the default Linux naming, that is ethN for frontend and vifDOMID.DEVID for backend devices, will be used.
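When scripting in the backend domain it can help to recover the domain ID and device index from a backend device name. The following is a minimal sketch, assuming the default Linux vifDOMID.DEVID naming (optionally with an -emu suffix); the function name parse_vif is made up for illustration and renamed devices will not match.

```shell
#!/bin/sh
# Split a default Linux backend name such as "vif12.0" into its parts.
# Hypothetical helper: assumes the default vifDOMID.DEVID naming.
parse_vif() {
    name=$1
    # Loose sanity check on the overall shape of the name.
    case $name in
        vif[0-9]*.[0-9]*) ;;
        *) echo "not a default vif name: $name" >&2; return 1 ;;
    esac
    rest=${name#vif}      # e.g. "12.0" or "12.0-emu"
    domid=${rest%%.*}     # text before the first dot
    devid=${rest#*.}      # text after the first dot
    devid=${devid%%-*}    # drop a trailing "-emu" style suffix, if any
    echo "domid=$domid devid=$devid"
}

parse_vif vif12.0
```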

The frontend and backend devices are linked by a virtual communication channel. Guest networking is achieved by arranging for traffic to pass from the backend device onto the wider network, e.g. using bridging, routing or Network Address Translation (NAT).

Emulated Network Devices

As well as PV network interfaces, fully virtualised (HVM) guests can also be configured with one or more emulated network devices. These devices emulate a real piece of hardware and are useful when a guest OS does not have PV drivers available or when they are not yet available (e.g. during guest installation).

An emulated network device is usually paired with a PV device with the same MAC address and configuration. This allows the guest to smoothly transition from the emulated device to the PV device when a driver becomes available.

The emulated network device is provided by the device model, running either as a process in domain 0 or as a Stub Domain.

When the device model (DM) runs as a process in domain 0, the device is surfaced in the backend domain as a tap type network device. Historically these were named either tapID (for an arbitrary ID) or tapDOMID.DEVID. More recently they have been named vifDOMID.DEVID-emu to highlight the relationship between the paired PV and emulated devices.

If the DM runs in a stub domain then the device surfaces in domain 0 as a PV network device attached to the stub domain. The stub domain will take care of forwarding between the device emulator and this PV device.

For the remainder of this document PV and Emulated devices are mostly interchangeable and we will use the PV naming in the examples.

Virtualised network interfaces in domains are given Ethernet MAC addresses. By default most Xen toolstacks will select a random address. Depending on the toolstack this will either be static for the entire lifetime of the guest (e.g. Libvirt, XAPI or xend managed domains) or will change each time the guest is started (e.g. XL or xend unmanaged domains).

In the latter case, if a fixed MAC address is required (e.g. for use with DHCP), it can be configured using the mac= option to the vif configuration directive (e.g. vif = ['mac=aa:00:00:00:00:11']). See XL Network Configuration for more details of the syntax.

When choosing MAC addresses there are in general three strategies which can be used. In decreasing order of preference these are:

- Assign an address from the range associated with an Organizationally Unique Identifier (OUI) which you control. If you do not know what this means then you likely do not control an OUI and this option does not apply to you.

- Generate a random sequence of 6 bytes, set the locally administered bit (bit 2 of the first byte) and clear the multicast bit (bit 1 of the first byte). In other words the first byte should have the bit pattern xxxxxx10 (where x is a randomly generated bit) and the remaining 5 bytes are randomly generated. See wikipedia for more details on the structure of a MAC address.

- Assign a random address from within the space 00:16:3e:xx:xx:xx. 00:16:3e is an OUI assigned to the Xen project and which has been made available for Xen users for the purposes of assigning local addresses within that space.

A MAC address must be unique among all network devices (both physical and virtual) on the same local network segment (e.g. on the LAN containing the Xen host). For this reason if you do not have your own OUI to use it is in general recommended to generate a random locally administered address (the second option above) rather than using the Xen OUI (the third option) since it gives 46 bits of randomness rather than 24 which significantly reduces the chances of a clash.
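The second and third strategies can be sketched in shell as follows. This is only an illustration, assuming a system with /dev/urandom, od, tr, cut and sed available; the function names random_local_mac and xen_oui_mac are invented for the example.

```shell
#!/bin/sh
# Print a random, locally administered, unicast MAC address
# (first byte has the bit pattern xxxxxx10).
random_local_mac() {
    # Six random bytes as twelve hex digits.
    hex=$(od -An -N6 -tx1 /dev/urandom | tr -d ' \n')
    first=$((0x$(printf '%s' "$hex" | cut -c1-2)))
    # Set the locally administered bit (0x02), clear the multicast bit (0x01).
    first=$(( (first | 0x02) & 0xfe ))
    tail=$(printf '%s' "$hex" | cut -c3-12 | sed 's/../:&/g')
    printf '%02x%s\n' "$first" "$tail"
}

# Print a random address inside the Xen OUI space 00:16:3e:xx:xx:xx.
xen_oui_mac() {
    hex=$(od -An -N3 -tx1 /dev/urandom | tr -d ' \n')
    printf '00:16:3e%s\n' "$(printf '%s' "$hex" | sed 's/../:&/g')"
}

random_local_mac
xen_oui_mac
```

Either result can then be used as the value of the mac= key. Uniqueness on the local segment is still only probabilistic, so a clash remains possible.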

The default (and most common) Xen configuration uses bridging within the backend domain (typically domain 0) to allow all domains to appear on the network as individual hosts.

In this configuration a software bridge is created in the backend domain. The backend virtual network devices (vifDOMID.DEVID) are added to this bridge along with an (optional) physical Ethernet device to provide connectivity off the host. By omitting the physical Ethernet device an isolated network containing only guest domains can be created.

There are two common naming schemes when using bridged networking. In one scheme the physical device eth0 is renamed to peth0 and a bridge named eth0 is created. In the other the physical device remains eth0 while the bridge is named xenbr0 (or br0 etc). We shall use the eth0+xenbr0 naming scheme here.

Of course you are free to use whatever names you like, including descriptive names (e.g. 'dmz', 'internal', 'external' etc).

Setting up bridged networking

The recommended method for configuring bridged networking is to use your distro supplied network configuration tools as described in Host Configuration/Networking.

Prior to Xen 4.1 when xend started up it would run the network-bridge script which would reconfigure any existing physical network configuration into a bridged network configuration i.e. it would create a bridge, move the IP address from the physical device to the bridge, add the physical device to the bridge etc. However this was fragile and prone to breaking and therefore is no longer recommended.

After Xen 4.1 xend will only do this if no bridges currently exist, so as to avoid overwriting any locally configured network configuration.

The XL toolstack will never modify the network configuration and expects that the administrator will have configured the host networking appropriately. Check out this XL example.

Attaching virtual devices to the appropriate bridge

When a domU starts up the vif-bridge script is run which:

- attaches vifDOMID.DEVID to the appropriate bridge

- brings vifDOMID.DEVID up.
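The two steps above correspond roughly to the following iproute2 commands. This is a sketch rather than the actual vif-bridge script: the helper name attach_vif_cmds is hypothetical, and it only prints the commands so that nothing on the host is changed.

```shell
#!/bin/sh
# Print (rather than run) commands roughly equivalent to the two steps.
# The bridge and device names passed in are illustrative.
attach_vif_cmds() {
    bridge=$1
    vif=$2
    echo "ip link set dev $vif master $bridge"   # attach the vif to the bridge
    echo "ip link set dev $vif up"               # bring the vif up
}

attach_vif_cmds xenbr0 vif1.0
```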

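With XL and xend the bridge to use for each VIF can be configured using the bridge configuration key in the vif specification. The key can be given on its own or combined with other keys such as mac, and multiple interfaces can be attached to different bridges by listing one vif specification per interface.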
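As a sketch, vif lines for these cases might look like the following. The MAC addresses and the bridge names br0 and br1 are illustrative assumptions, and a real domain configuration file would contain only one of the three vif lines.

```
# Single interface attached to xenbr0
vif = [ 'bridge=xenbr0' ]

# The same, with a fixed MAC address
vif = [ 'mac=00:16:3e:01:01:01,bridge=xenbr0' ]

# Two interfaces attached to different bridges
vif = [ 'mac=00:16:3e:70:01:01,bridge=br0', 'mac=00:16:3e:70:02:01,bridge=br1' ]
```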

Bridging Loops

It is common practice to disable the Spanning Tree Protocol on Xen bridges. However if guests are able to themselves bridge two or more interfaces together then you run the risk of creating bridging loops. See Xen Bridge Loop for more discussion of this issue.

Links

Some relevant topics from the mailing list:

Many of the links presented here are rather old and may refer to configurations which are no longer best practice, such as the use of the network-* scripts to configure networking.

- eth0 IP in dom0 2005/01/14

- Bridging vs. Routing 2005/01/13

- Bridging vs. Routing 2004/07/18

- An attempt to explain Xen networking 2006-02-01

- Xen and the Art of Consolidation (with bridging)

The Xen 4.3 release will feature initial integration of Open vSwitch based networking. Conceptually this is similar to a bridged configuration, but rather than placing each vif on a Linux bridge an Open vSwitch switch is used instead. Open vSwitch supports more advanced Software-defined Networking (SDN) features such as OpenFlow.

Setting up Open vSwitch networking

Set up openvswitch according to the Host Networking Configuration Examples.

If you want openvswitch to be the default, add the following line to your xl.conf file:
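A line of roughly this form would select the script, assuming the vif.default.script option described in the xl.conf manual page:

```
vif.default.script="vif-openvswitch"
```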

If you have given the openvswitch bridge a name other than xenbr0, you will need to update that default as well:
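For instance, assuming the switch was named ovsbr0 (an illustrative name) and using the vif.default.bridge option from the xl.conf manual page:

```
vif.default.bridge="ovsbr0"
```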

Alternatively, you can specify the new script (and bridge, if necessary) in each config file by adding script=vif-openvswitch (and possibly bridge=ovsbr0) to the vifspec of individual vifs in config files. See xl-network-configuration.markdown for more information.

Attaching virtual devices to the appropriate switch

Xen 4.3 ships with a vif-openvswitch hotplug script which behaves similarly to the vif-bridge script, except that it attaches the VIF to an openvswitch switch (named via the VIF's bridge parameter).

In addition to naming the bridge, the openvswitch hotplug script supports an extended syntax for the bridge option which allows for VLAN tagging and trunking. That syntax is:

To add a vif to VLAN 102 on bridge xenbr0:

To add a vif to bridge xenbr1 trunked and receiving traffic for VLAN 101 and 202:
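The extended bridge value is assumed here to take the form BRIDGE_NAME[.VLAN][:TRUNK:TRUNK], so that the two cases above would be written as bridge=xenbr0.102 and bridge=xenbr1:101:202 in a vif specification. Under that assumption, a small shell sketch (parse_ovs_bridge is a hypothetical helper, not part of Xen) shows how such a value decomposes:

```shell
#!/bin/sh
# Split a bridge value of the assumed form BRIDGE[.VLAN][:TRUNK[:TRUNK...]].
parse_ovs_bridge() {
    spec=$1
    head=${spec%%:*}          # part before the first ':'
    trunks=
    case $spec in
        # Everything after the first ':' is a colon-separated trunk list.
        *:*) trunks=$(printf '%s' "${spec#*:}" | tr ':' ',') ;;
    esac
    bridge=${head%%.*}        # bridge name before any '.'
    vlan=
    case $head in
        *.*) vlan=${head#*.} ;;   # VLAN tag after the '.'
    esac
    echo "bridge=$bridge vlan=$vlan trunks=$trunks"
}

parse_ovs_bridge xenbr0.102       # VLAN 102 on xenbr0
parse_ovs_bridge xenbr1:101:202   # xenbr1 trunking VLANs 101 and 202
```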

In a routed network configuration a point-to-point link is created between the backend domain (typically domain 0) and each domU virtual network interface. Traffic is then routed between these point-to-point links and the outside world using the backend domain's network routing functionality.

For a general discussion of network routing see the wikipedia page on the subject.

Because routes are created dynamically as domains are created it is usually necessary for each guest network interface to have a known static IP address.

Setting up routing on the host

The recommended method for configuring networking is to use your distro supplied network configuration tools as described in Host Configuration/Networking.

Prior to Xen 4.1 when xend started up it would run the network-route script which performed the necessary configuration. However this mechanism was fragile and prone to breaking and therefore is no longer recommended.

The XL toolstack will never modify the network configuration and expects that the administrator will have configured the host networking appropriately. Check out this XL example.

Associating routes with virtual devices

When a domU starts up, the vif-route script is run for each virtual device vifDOMID.DEVID. This script sets up routing for that device by:

- Adding an IP address to the device. This address is largely arbitrary but is required so that the interface can take part in routing. By default domain 0's IP address is used.

- Bringing up the device.

- Adding a static host route for the interface's IP address, as specified in the domU config file, which routes traffic to the vifDOMID.DEVID interface.
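The three steps can be approximated with iproute2 commands as sketched below. This is not the vif-route script itself: route_vif_cmds is a hypothetical helper, the addresses are made up, and the commands are only printed so that the host is left untouched.

```shell
#!/bin/sh
# Print commands that approximate the three routing steps.
# Arguments: backend device, guest IP address, backend domain IP address.
route_vif_cmds() {
    vif=$1
    guest_ip=$2
    host_ip=$3
    echo "ip addr add $host_ip/32 dev $vif"                  # give the vif an address
    echo "ip link set dev $vif up"                           # bring the vif up
    echo "ip route add $guest_ip/32 dev $vif src $host_ip"   # host route to the guest
}

route_vif_cmds vif1.0 10.0.0.10 192.168.1.2
```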

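The IP address associated with a virtual network interface should be specified in the domain configuration file using the ip configuration key. The key can be given on its own or combined with other keys such as mac, and for multiple devices one address is given per vif specification.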
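As a sketch, vif lines using the ip key might look like the following. The addresses and MAC are illustrative assumptions, and a real domain configuration file would contain only one of the three vif lines.

```
# Single interface with a known static address
vif = [ 'ip=10.0.0.10' ]

# The same, with a fixed MAC address
vif = [ 'mac=00:16:3e:01:01:01,ip=10.0.0.10' ]

# Two interfaces, one address each
vif = [ 'ip=10.0.0.10', 'ip=10.0.1.10' ]
```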

More information on vif-route can be found here.

Network Address Translation or NAT is a form of routing which gives each guest VIF its own IP address on a private/internal network, often using RFC1918 addresses, and performs address translation at the router/firewall (e.g. domain 0) to connect the entire private network to the rest of the network via a single public IP address.

NAT is sometimes also called 'IP masquerading'.

Setting up NAT on the host

Setting up NAT is similar to configuring Routing as described above with the most obvious difference being that one should enable NAT in the backend domain.
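On a Linux backend domain, enabling NAT usually comes down to turning on IP forwarding and adding a masquerading rule. The sketch below assumes iptables is in use and that eth0 is the outward-facing interface; nat_cmds is a hypothetical helper that only prints the commands.

```shell
#!/bin/sh
# Print commands that enable forwarding and masquerade traffic leaving
# through the given external interface.
nat_cmds() {
    ext_if=$1
    echo "sysctl -w net.ipv4.ip_forward=1"
    echo "iptables -t nat -A POSTROUTING -o $ext_if -j MASQUERADE"
}

nat_cmds eth0
```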

The recommended method for configuring networking is to use your distro supplied network configuration tools as described in Host Configuration/Networking.

Prior to Xen 4.1 when xend started up it would run the network-nat script which performed the necessary configuration. However this mechanism was fragile and prone to breaking and therefore is no longer recommended.

The XL toolstack will never modify the network configuration and expects that the administrator will have configured the host networking appropriately. Check out this XL example.

Virtual Device Configuration

In a NAT'd configuration virtual devices are given IP addresses on a private network, typically an RFC1918 internal network. Guests may either be configured statically with addresses in the chosen network space or you can choose to run a DHCP server within that network (perhaps on the host itself) to provide addresses to guests.

When a domU starts up, the vif-nat script is run for each virtual device vifDOMID.DEVID. If the ISC DHCP server is installed then this script will attempt to dynamically reconfigure the DHCP service to serve up entries for the mac and ip address configuration keys in the guest configuration file. This is specific to the ISC DHCP server's configuration file syntax, so if you are using a different DHCP server or simply want to manage the DHCP server yourself then you should disable the vif-nat script (which seems like a good idea, since automatic editing of the DHCP configuration is bound to be fragile).

Multiple tagged VLANs can be supported by configuring 802.1Q VLAN support into the backend domain (typically domain 0).

Once configured according to Host Configuration/Networking then the VLAN devices can be treated like any other device and used for either routing or bridging.
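For a quick, non-persistent experiment, a tagged VLAN device can also be created by hand with iproute2. The sketch below prints the commands for a given parent device and VLAN ID; vlan_cmds is a hypothetical helper and eth0 and 100 are example values. Persistent configuration should still go through the distribution's tools.

```shell
#!/bin/sh
# Print commands that create and bring up an 802.1Q VLAN device
# named PARENT.VID on top of PARENT.
vlan_cmds() {
    parent=$1
    vid=$2
    echo "ip link add link $parent name $parent.$vid type vlan id $vid"
    echo "ip link set dev $parent.$vid up"
}

vlan_cmds eth0 100
```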

Likewise bonding (or even VLANs over bonding etc) can also be created by following distribution specific documentation and treating the resulting device as normal.

By combining the above with the networking capabilities of the host OS it is possible to create more complex configurations to suit various different requirements.

- Virtual network using a brouter.

- This configuration uses a bridge with no physical device, shared by the guests. The bridge has an IP address in domain 0, and traffic is then routed (or even NATed) to the external network (hence "bridged router"). See 'Xen3 and a Virtual Network' for a more complete description of this type of configuration.

The following diagrams attempt to show some common networking topologies used with Xen. See Network Configuration Examples (Xen 4.1+) for examples of how to achieve these configurations using distribution provided tools.

Standard Bridged Networking Architecture

Notes:

- xenbrX has an active address, which is used by dom0 to communicate with outside.

Xen Networking with VLANs

Notes:

- With this configuration, DomUs are completely unaware of the fact that they are utilizing a VLAN, all the work is done within the bridges in Dom0.

- Dom0 is aware of the traffic within the VLAN, because it has an active address on the xenbrX interfaces. To prevent this, don't give the xenbrX an active address, but configure an extra interface for management.

- Two things may need to be configured:

- If your ethernet card does not natively support VLAN tags, you will have to set the maximum MTU to 1496 to make room for the tag.

- With the DomUs bridged to VLAN interfaces, some optimizations need to be disabled or TCP and UDP connections will fail. This is done by disabling transmit checksum offloading.
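Both adjustments can be made with standard Linux tools. The sketch below prints the commands for a given interface; vlan_tuning_cmds is a hypothetical helper, eth0 is an example name, and which interface needs the offload change (physical NIC, VLAN device or vif) depends on the setup.

```shell
#!/bin/sh
# Print commands that lower the MTU to leave room for the VLAN tag and
# disable transmit checksum offloading on the given interface.
vlan_tuning_cmds() {
    nic=$1
    echo "ip link set dev $nic mtu 1496"
    echo "ethtool -K $nic tx off"
}

vlan_tuning_cmds eth0
```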

Xen Networking with bonding

Xen Networking with vlan on bonding

Notes:

- The connections at the top are switch ports - probably on 2 switches with an ISL

- bond0 has eth0 and eth1 ; bond1 has eth2 and eth3

- In the VMs eth0 maps to bond0.100 and eth1 maps to bond1.200

- Protocols suggest a service VLAN (100) and a mgmt VLAN (200)

Needs Review. Important: some parts of this page are out of date and need to be reviewed and corrected!

About

These drivers allow Windows to make use of the network and block backend drivers in Dom0, instead of the virtual PCI devices provided by QEMU. This gives Windows a substantial performance boost, and most of the testing that has been done confirms that. This document refers to the new WDM version of the drivers, not the previous WDF version. Some information may apply though.

I was able to see a network performance improvement from 221 Mbit/sec to 998 Mbit/sec using iperf to test throughput. Disk I/O, tested with CrystalMark, improved from 80 MB/sec to 150 MB/sec on 512-byte sequential writes, and read performance reached 180 MB/sec.

With the launch of new Xen project pages the main PV driver page on www.xenproject.org keeps a lot of the more current information regarding the paravirtualization drivers.

Supported Xen versions

Gplpv >=0.11.0.213 was tested for a long time on Xen 4.0.x and works; it should also work on Xen 4.1.

Gplpv >=0.11.0.357 tested and working on Xen 4.2 and Xen 4.3 unstable.

05/01/14 Update:

The signed drivers from ejbdigital work great on Xen 4.4.0. If you experience a bluescreen while installing these drivers, or after a reboot after installing them, please try adding device_model_version = 'qemu-xen-traditional'. I had an existing 2008 R2 x64 system that consistently failed with a BSOD after the gpl_pv installation. Switching to the 'qemu-xen-traditional' device model resolved the issue. However, on a clean 2008 R2 x64 system, I did not have to make this change, so please bear this in mind if you run into trouble.

I do need to de-select 'Copy Network Settings' during a custom install of gpl_pv. Leaving 'Copy network settings' resulted in a BSOD for me in 2008R2 x64.

I run Xen 4.4.0-RELEASE built from source on Debian Jessie amd64.

PV drivers 1.0.1089 tested on windows 7 x64 pro SP1, dom0 Debian Wheezy with xen 4.4 from source and upstream qemu >=1.6.1 <=2.0.

Notes:

- Upstream qemu version 1.6.0 always, and older versions in some cases, have a critical problem with HVM domUs that is not related to the PV drivers.

- If domU disk performance is poor when using blktap2 disks, this is not a PV driver problem. Remove blktap2 and use the qdisk backend of upstream qemu instead for a big increase in disk performance (mainly in write operations).

Supported Windows versions

In theory the drivers should work on any version of Windows supported by Xen. With their respective installers, this covers Windows 2000 through Windows 7, 32 and 64-bit, including the server versions. Please see the release notes for any version of gpl_pv you download to ensure compatibility.

I have personally used gpl_pv on Windows 7 Pro x64, Windows Server 2008 x64, Windows Server 2008 R2 x64 and had success.

Recently I gave Windows 10 a try under Xen 4.4.1 (using Debian Jessie). The paravirtualization drivers still work. The drivers have not been installed from scratch but have been kept during the Windows Upgrade from Windows 7 to Windows 10.

Building

Sources are now available from the Xen project master git repository:

In addition you will need the Microsoft tools as described in the README files. The information under 'Xen Windows GplPv/Building' still refers to the old Mercurial source code repository and is probably dated.

Downloading

New, signed GPL_PV drivers are available at what appears to be the new home of GPL_PV at http://www.ejbdigital.com.au/gplpv

These may be better than anything currently available from meadowcourt or univention.

Older binaries, and latest source code, are available from http://www.meadowcourt.org/downloads/

- There is now one download per platform/architecture, named as follows:

- platform is '2000' for 2000, 'XP' for XP, '2003' for 2003, and 'Vista2008' for Vista/2008/7

- arch is 'x32' for 32 bit and 'x64' for 64 bits

- 'debug' marks a build which contains debug info (please use these if you want any assistance in fixing bugs)

- without 'debug', the build contains no debug info

Signed drivers

Newer, signed, GPL_PV drivers are available at what appears to be the new home of GPL_PV at http://www.ejbdigital.com.au/gplpv

You can get older, signed, GPLPV drivers from univention.

Signed drivers allow installation on Windows Vista and above (Windows 7, Windows Server 2008, Windows 8, Windows Server 2012) without enabling test signing.

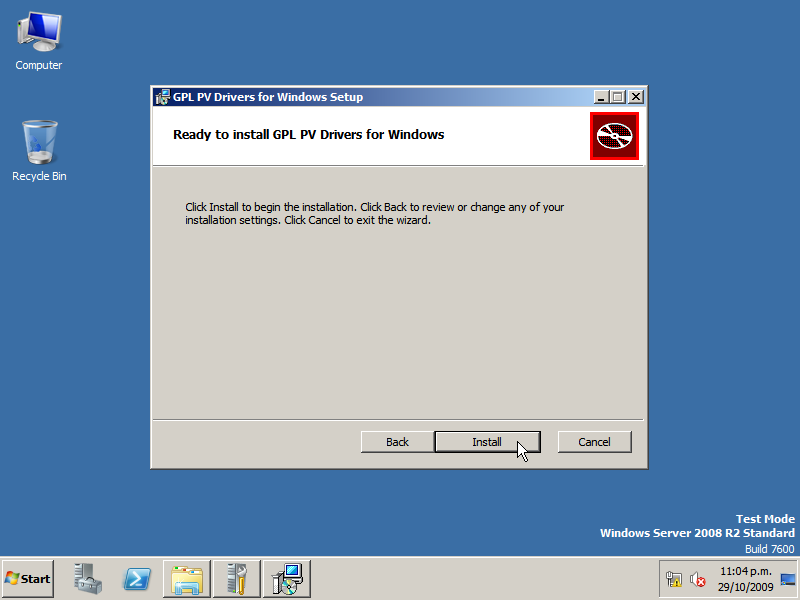

Installing / Upgrading

Once built (or downloaded for a binary release), the included NSIS installer should take care of everything. See here for more info, including info on bcdedit under Windows 2008 / Vista.

Warning: please do visit the Installing page linked above. It holds information that will help you avoid crashing your installation, concerning the use of the /GPLPV boot parameter.

Using

Prior to 0.9.12-pre9, '/GPLPV' needed to be specified in your boot.ini file to activate the PV drivers. As of 0.9.12-pre9, /NOGPLPV in boot.ini will disable the drivers, as will booting into safe mode. With 'shutdownmon' running, 'xm shutdown' and 'xm reboot' issued from Dom0 should do the right thing too.

In your machine configuration, make sure you don't use the ioemu network driver. Instead, use a line like:

vif = []

A fixed MAC address can also be set, which is useful for avoiding the risk of having to reactivate a Windows license.

Known Issues

This is a list of issues that may or may not affect you. These are not confirmed issues that will consistently repeat themselves. An issue listed here should not stop you from trying gpl_pv in a safe environment. Please report both successes and failures to the mailing list, it all helps!

- An OpenSolaris Dom0 is reported not to work, for reasons unknown.

- Checksum offload has been reported to not work correctly in some circumstances.

- Shutdown monitor service in some cases is not added, and must be added manually.

- Network does not work after a restore with upstream qemu; the workaround for now is to set a fixed MAC address in the domU's xl cfg file.

- Installing with 'Copy Network Settings' may result in a blue screen.

- A blue screen may result if you are not using the traditional qemu emulator.

PLEASE TEST YOUR PERFORMANCE USING IPERF AND/OR CRYSTALMARK BEFORE ASSUMING THERE IS A PROBLEM WITH GPL_PV ITSELF

Note: I was using pscp to copy a large file from another machine to a Windows 2008 R2 DomU machine and was routinely only seeing 12-13MB/sec download rate. I consistently had blamed Windows and gpl_pv as the cause of this. I was wrong! Testing the network interface with iperf showed a substantial improvement after installing gpl_pv and the disk IO showed great performance when tested with CrystalMark. I was seeing a bug in pscp itself. Please try to test performance in a multitude of ways before submitting a complaint or bug report.

Using the windows debugger under Xen

Set up Dom0

- Change/add the serial line to your Windows DomU config to say serial='pty'

- Add a line to /etc/services that says 'windbg_domU 4440/tcp'. Change the domU bit to the name of your windows domain.

- Add a line to /etc/inetd.conf that says 'windbg_domU stream tcp nowait root /usr/sbin/tcpd xm console domU'. Change the domU bit to the name of your domain. (if you don't have an inetd.conf then you'll have to figure it out yourself... basically we just need a connection to port 4440 to connect to the console on your DomU)

- Restart inetd.
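The two lines described above can be generated for any domain name with a small helper. This is only a sketch: the function names are invented, the script prints the lines rather than editing /etc/services or /etc/inetd.conf, and port 4440 is simply the value used in this walkthrough.

```shell
#!/bin/sh
# Print the /etc/services entry for a given domain name (port defaults to 4440).
windbg_services_line() {
    dom=$1
    port=${2:-4440}
    echo "windbg_$dom $port/tcp"
}

# Print the /etc/inetd.conf entry that attaches the port to the domain console.
windbg_inetd_line() {
    dom=$1
    echo "windbg_$dom stream tcp nowait root /usr/sbin/tcpd xm console $dom"
}

windbg_services_line win2008
windbg_inetd_line win2008
```

After reviewing the output, the lines can be appended to the respective files by hand before restarting inetd.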

Set up the machine you will be debugging on - another Windows machine that can connect to your Dom0.

- Download the windows debugger from Microsoft and install.

- Download the 'HW Virtual Serial Port' application from HW Group and install. Version 3 appears to be out, but I've only ever used 2.5.8.

Boot your DomU

- xm create DomU (or whatever you normally use to start your DomU)

- Press F8 when you get to the Windows text boot menu and select debugging mode, then boot. The system should appear to hang before the splash screen starts.

Start HWVSP

- Start the HW Virtual Serial Port application

- Put the IP address or hostname of your Dom0 in under 'IP Address'

- Put 4440 as the Port

- Select an unused COM port under 'Port Name' (I just use Com8)

- Make sure 'NVT Enable' in the settings tab is unticked

- Save your settings

- Click 'Create COM'. If all goes well it should say 'Virtual serial port COM8 created' and 'Connected device '

Run the debugger

- Start windbg on your other windows machine

- Select 'Kernel Debug' from the 'File' menu

- Select the COM tab, put 115200 in as the baud rate, and com8 as the port. Leave 'Pipe' and 'Reconnect' unticked

- Click OK

- If all goes well, you should see some activity, and the HWVSP counters should be increasing. If nothing happens, or if the counters start moving and then stop, quit windbg, delete the com port, and start again from 'Start HWVSP'. Not sure why but it doesn't always work the first time.

Debugging

- The debug output from the PV drivers should fly by. If something isn't working, that will be useful when posting bug reports.

- If you actually want to do some debugging, you'll need to have built the drivers yourself so you have the src and pdb files. In the Symbol path, add '*SRV*c:\websymbols*http://msdl.microsoft.com/download/symbols;c:\path_to_source\target\winxp\i386'. Change winxp\i386 to whatever version you are debugging.

- Actually using the debugger is beyond the scope of this wiki page :)

Developers


- xenpci driver - communicates with Dom0 and implements the xenbus and event channel interfaces

- xenhide driver - disables the QEMU PCI ATA and network devices when the PV devices are active

- xenvbd driver - block device driver

- xennet driver - network interface driver

- xenstub driver - provides a dummy driver for vfb and console devices enumerated by xenpci so that they don't keep asking for drivers to be provided.